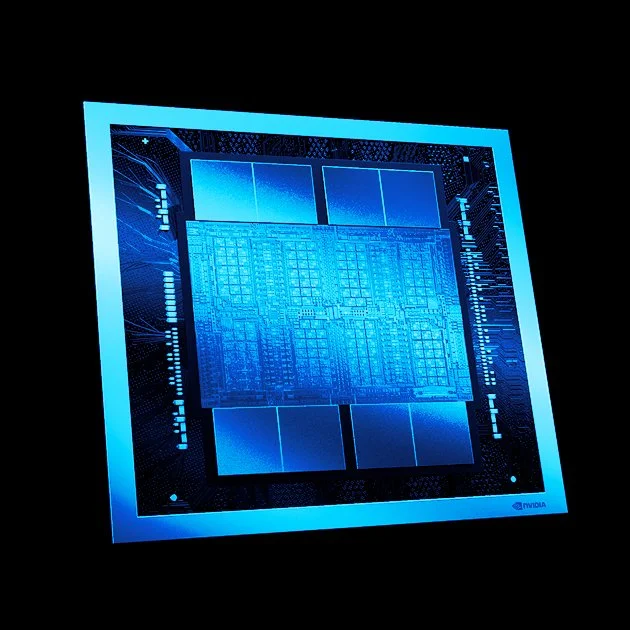

NEXT-GEN PERFORMANCE AVAILABLE TODAY

B300 SXM6

Built on NVIDIA’s Blackwell Ultra architecture, the B300 SXM6 GPU delivers a new tier of computational power designed for frontier-scale AI. With 288 GB of HBM3e memory per GPU and NVLink 5 interconnects, the B300 enables faster model training, longer context windows, and more efficient inference than previous GPU generations.

B300 SXM6 Performance Highlights

288 GB

High-Bandwidth Memory (HBM3e) per GPU

1.5x Faster

Dense FP4 throughput vs B200 GPUs

1.8 TB/s

GPU-to-GPU interconnect bandwidth

2-5x

More Throughput vs. Hopper-based systems

QumulusAI Server Configurations Featuring NVIDIA B300 SXM6

Our B300 SXM6 systems are engineered to support the next generation of AI workloads—offering peak performance, massive memory capacity, and best-in-class parallelization for LLMs, diffusion models, and real-time inference.

GPUs Per Server: 8 × NVIDIA B300 Blackwell Tensor Core GPUs

System Memory: 3072 GB DDR5 @ 6400 MT/s

CPU: 2 × Intel Xeon 6767P (64 Cores / 128 Threads) | 2.4 GHz (base) / 2.8 GHz (boost)

Storage: 30 TB NVMe SSD

vCPUs: 256 virtual CPUs

Interconnects: NVIDIA NVLink 5, up to 1.8 TB/s GPU-to-GPU bandwidth
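For capacity planning, the per-server totals implied by the configuration above can be worked out with a few lines of Python. This is a minimal sketch: the input figures are copied from this page, while the function names are hypothetical and the two-threads-per-core figure is inferred from the listed 64 cores / 128 threads.

```python
# Figures copied from the server configuration on this page.
GPUS_PER_SERVER = 8
HBM_PER_GPU_GB = 288
CPU_SOCKETS = 2
CORES_PER_CPU = 64
THREADS_PER_CORE = 2  # inferred from "64 Cores / 128 Threads"


def aggregate_hbm_gb(gpus: int = GPUS_PER_SERVER,
                     hbm_per_gpu_gb: int = HBM_PER_GPU_GB) -> int:
    """Total GPU memory across all GPUs in one server, in GB."""
    return gpus * hbm_per_gpu_gb


def hardware_threads(sockets: int = CPU_SOCKETS,
                     cores: int = CORES_PER_CPU,
                     threads_per_core: int = THREADS_PER_CORE) -> int:
    """Total CPU hardware threads in one server."""
    return sockets * cores * threads_per_core


if __name__ == "__main__":
    print(aggregate_hbm_gb())   # 2304
    print(hardware_threads())   # 256
```

The thread count matches the 256 vCPUs listed, and the eight GPUs together provide 2304 GB of HBM3e per server.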

Ideal Use Cases

Foundation-Model Training at Scale

Handle trillion-parameter architectures, complex multi-node training, and advanced reinforcement-learning workloads with headroom to spare. The B300’s 288 GB of HBM3e memory and NVLink 5 bandwidth make it ideal for distributed training across massive datasets.
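As a rough illustration of what "trillion-parameter" means for memory, the sketch below estimates the footprint of model weights alone at a few numeric precisions and the minimum number of 288 GB GPUs needed to hold them. It is a lower bound under stated assumptions: the helper names are hypothetical, the precisions other than FP4 are illustrative choices, and real training also needs memory for gradients, optimizer state, and activations, which this does not count.

```python
import math

HBM_PER_GPU_GB = 288  # per-GPU HBM3e capacity stated on this page


def weights_gb(num_params: int, bits_per_param: int) -> float:
    """Memory for the weights only, in decimal GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def min_gpus_for_weights(num_params: int, bits_per_param: int,
                         hbm_per_gpu_gb: int = HBM_PER_GPU_GB) -> int:
    """Smallest GPU count whose combined HBM can hold the weights."""
    return math.ceil(weights_gb(num_params, bits_per_param) / hbm_per_gpu_gb)


if __name__ == "__main__":
    one_trillion = 10**12
    # Illustrative precisions; only FP4 is mentioned on this page.
    for name, bits in [("FP4", 4), ("FP8", 8), ("BF16", 16)]:
        gb = weights_gb(one_trillion, bits)
        gpus = min_gpus_for_weights(one_trillion, bits)
        print(f"{name}: {gb:.0f} GB of weights, at least {gpus} GPUs")
```

Under these assumptions, the weights of a one-trillion-parameter model take about 500 GB at 4 bits per parameter and about 2000 GB at 16 bits, so they fit within a single 8-GPU server in either case; full training state typically requires several times more and therefore multiple nodes.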

Reasoning and Inference for Frontier LLMs

Deploy next-gen LLMs, RAG pipelines, and multi-modal agents with near-real-time performance. The B300 accelerates dense inference and contextual reasoning tasks while minimizing energy and memory overhead.

High-Performance Simulation and Scientific Modeling

Power advanced physics simulations, digital twins, and climate or material modeling with unprecedented precision. The B300’s compute density and memory capacity enable scientists to iterate faster and at higher resolution than previous generations.

Why Choose QumulusAI?

Guaranteed Availability

Secure dedicated access to the latest NVIDIA GPUs, ensuring your projects proceed without delay.

Optimal Configurations

Our server builds are optimized to meet, and often exceed, industry standards for high-performance computing.

Support Included

Benefit from our deep industry expertise without paying any support fees tied to your usage.

Custom Pricing

Achieve superior performance without compromising your budget, with custom, predictable pricing.